The newest AlphaGo mastered the game with no human input

AlphaGo Zero is a major breakthrough that has earned DeepMind’s research another spot in the scientific journal Nature.

“The most striking thing for me is we don’t need any human data anymore”, says Demis Hassabis, the CEO and co-founder of DeepMind. “If we can make the same progress on these problems that we have with AlphaGo, it has the potential to drive forward human understanding and positively impact all of our lives”.

Singh does not think we should be anxious about the increasing abilities of artificial intelligence compared with what humans can do. A computer first beat the world checkers champion in 1994, and a computer beat world chess champion Garry Kasparov two decades ago.

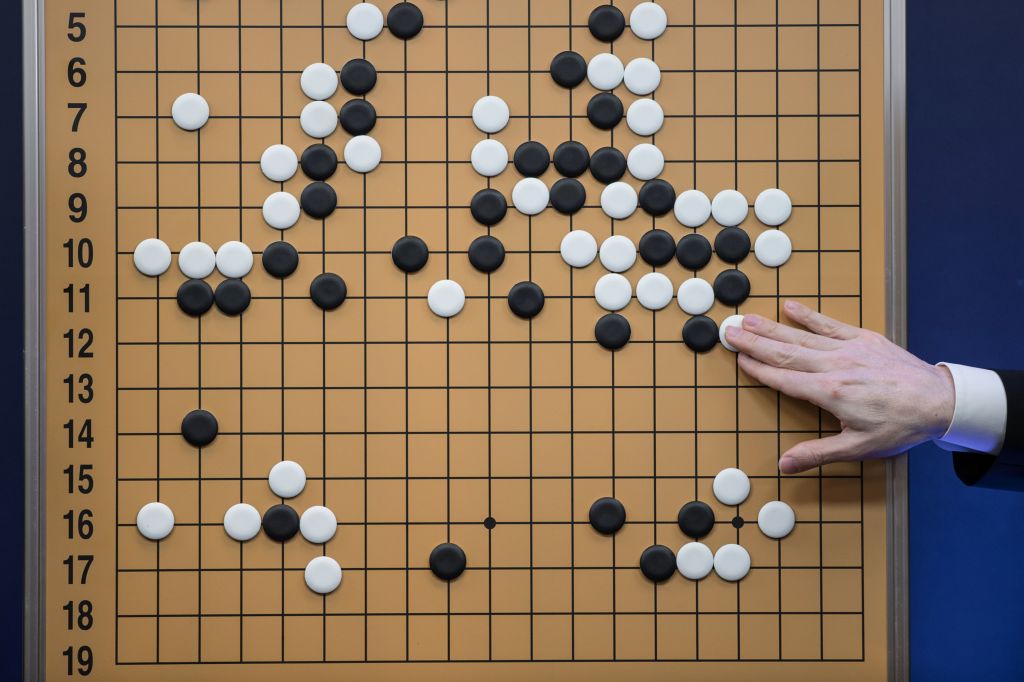

Instead of learning from human-played games, Silver says, AlphaGo Zero was given the simple rules of Go and asked to play itself. There are far more potential arrangements of the pieces than atoms in the known universe, making it impossible for a computer to play the game by exhaustively simulating all moves and outcomes.
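The comparison with atoms can be checked with rough arithmetic. The sketch below assumes a standard 19 x 19 board and uses the commonly cited order-of-magnitude estimate of 10^80 atoms in the observable universe; neither figure comes from the article, and the count is only an upper bound, because many of the arrangements it includes are not legal positions.

```python
# Each of the 19 x 19 = 361 points is empty, black, or white, so 3**361 is an
# upper bound on the number of board arrangements (not all of them are legal).
BOARD_POINTS = 19 * 19
upper_bound = 3 ** BOARD_POINTS

# Assumed rough estimate of atoms in the observable universe (not from the article).
ATOMS_ESTIMATE = 10 ** 80

bound_digits = len(str(upper_bound))
ratio_digits = len(str(upper_bound // ATOMS_ESTIMATE))

print(f"Upper bound on arrangements has {bound_digits} digits")
print(f"That is a {ratio_digits}-digit multiple of the atom estimate")
```

Python integers have arbitrary precision, so the bound is computed exactly rather than approximated with floating point.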

David Silver, DeepMind’s lead AlphaGo researcher, explained how AlphaGo Zero learns completely tabula rasa (from scratch). Earlier versions of AlphaGo took what they learned from humans and did it better; earlier this year, AlphaGo Master won 60 online games in a row against some of the world’s top Go players. Those versions used a “policy network” to select the next move to play and a “value network” to predict the victor of the game from each position. Training such networks is a form of machine learning, the same kind of technique that lets Facebook identify photos of your friends. Rather than examining every possible sequence of moves, the program selectively prunes branches by deciding which paths seem most promising.
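The idea of letting one function propose moves and another judge positions can be sketched on a toy game. Everything below is an illustrative assumption rather than DeepMind’s method: the game is a simple stone-taking game instead of Go, `policy` and `value` are hand-written stand-ins for the neural networks, and `pruned_search` is a plain depth-limited search, not the search AlphaGo uses.

```python
from typing import List, Optional, Tuple

# Toy game (an assumption for illustration, not Go): players alternately take
# 1 to 3 stones from a pile, and whoever takes the last stone wins.

def legal_moves(stones: int) -> List[int]:
    return [m for m in (1, 2, 3) if m <= stones]

def policy(stones: int, move: int) -> float:
    """Hand-written stand-in for a policy network: a prior score for each move."""
    return 1.0 if (stones - move) % 4 == 0 else 0.1

def value(stones: int) -> float:
    """Hand-written stand-in for a value network, from the mover's viewpoint."""
    return -1.0 if stones == 0 else 0.0  # no stones left: the mover has lost

def pruned_search(stones: int, depth: int, top_k: int = 2) -> Tuple[float, Optional[int]]:
    moves = legal_moves(stones)
    if depth == 0 or not moves:
        return value(stones), None
    # Pruning step: expand only the top_k moves the policy rates most promising.
    promising = sorted(moves, key=lambda m: policy(stones, m), reverse=True)[:top_k]
    best_score, best_move = float("-inf"), None
    for move in promising:
        child_score, _ = pruned_search(stones - move, depth - 1, top_k)
        score = -child_score  # the opponent's gain is our loss
        if score > best_score:
            best_score, best_move = score, move
    return best_score, best_move

score, move = pruned_search(5, depth=4)
print(score, move)
```

With `top_k=2` the search never looks at the third candidate move in any position, which is the trade-off pruning makes: less work per position in exchange for trusting the move ranking.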

“And at the end of each of these games it actually trains a new neural network”.

Unlike the previous versions of AlphaGo, the new “AlphaGo Zero” AI learns how to play Go without any human data. It surpassed its predecessor despite training for only three days, compared with several months for the earlier version.

The computational strategy that underlies AlphaGo Zero is effective primarily in situations in which you have an extremely large number of possibilities and want to find the optimal one.

Describing it in Nature, the team says it learns exclusively from the games it plays against itself. “The architecture is simpler, yet more powerful, than previous versions”, he says.

To achieve Go supremacy, AlphaGo Zero simply played against itself, randomly at first. The only human input is to tell it the rules of the game. AlphaGo Zero is likely to change that.
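Self-play of this kind can be sketched in a few lines on a toy game. The example is a loose illustration under stated assumptions, not AlphaGo Zero’s algorithm: the game is a stone-taking game rather than Go, a table of win counts stands in for the neural network, and the names `self_play_game`, `train`, and the `explore` parameter are hypothetical.

```python
import random
from collections import defaultdict

# Toy game (assumed for illustration): take 1 to 3 stones per turn,
# and whoever takes the last stone wins. The rules are the only input.

def win_rate(stats, stones, move):
    wins, plays = stats[(stones, move)]
    return wins / plays if plays else 0.5  # unseen moves look neutral

def self_play_game(stats, stones, rng, explore=0.2):
    history = []  # (stones, move) for each turn; the two sides alternate
    while stones > 0:
        moves = [m for m in (1, 2, 3) if m <= stones]
        rng.shuffle(moves)  # random tie-breaking, so early play is effectively random
        if rng.random() < explore:
            move = moves[0]  # occasional random move to keep exploring
        else:
            move = max(moves, key=lambda m: win_rate(stats, stones, m))
        history.append((stones, move))
        stones -= move
    # The side that made the final move won; walk backwards, alternating sides.
    for turns_from_end, (s, m) in enumerate(reversed(history)):
        wins, plays = stats[(s, m)]
        won = turns_from_end % 2 == 0
        stats[(s, m)] = (wins + int(won), plays + 1)

def train(games=2000, start=10, seed=0):
    rng = random.Random(seed)
    stats = defaultdict(lambda: (0, 0))
    for _ in range(games):
        self_play_game(stats, start, rng)  # both sides share the same statistics
    return stats

stats = train()
```

Because both sides draw on the same statistics, every game improves the single player that is competing against itself, which is the essence of the self-play setup described above.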

“It left open this question: Do we really need the human expertise?” says Satinder Singh, a professor specializing in reinforcement learning at the University of Michigan, who wrote a review of the new paper in Nature. Go is far more complicated than chess. To train itself, AlphaGo Zero had to be limited to a problem in which clear rules constrained its actions and determined the outcome of a game. The program starts with the current board and considers the possible moves. It started off trying greedily to capture stones, as beginners often do, but after three days it had mastered complex tactics used by human experts. By the 70-hour mark, it had developed a mature, sophisticated style.

Responding to the announcement in a separate editorial for Nature, Satinder Singh, the director of the University of Michigan’s AI lab, said Zero “massively outperforms the already superhuman AlphaGo” and could be one of the biggest AI advances so far. Ultimately, it came to favour previously unknown joseki (standard sequences of moves, typically played in the corners of the board). After a few days’ practice, AlphaGo Zero trounced AlphaGo Lee 100 games to none, researchers report in the October 19 Nature.

The showdown was a David vs Goliath match-up.

The fact that the human-guided AlphaGo that defeated Lee Sedol couldn’t muster a single win against the self-taught AlphaGo Zero had researchers arriving at some rather mind-blowing, and perhaps spine-chilling, conclusions.

He adds: “They’re not putting explicit declarative knowledge of things other than the rules of Go in there, but there’s a lot of implicit knowledge that the programmers have about how to construct machines to play problems like Go”.

AlphaGo Zero’s success bodes well for AI’s mastery of games, says Oren Etzioni of the Allen Institute for Artificial Intelligence.