Visual search engine Lens comes to all Google Photos users

In Android P, users can expect messaging app notifications to become even more functional than they are today. Starting in November 2018, all apps submitted to the Google Play Store will need to target Android Oreo or newer.

For those unaware, Big Data refers to extremely large sets of information that are analyzed computationally to reveal patterns, trends, and associations, especially relating to human behavior and interactions. That analysis often relies on machine learning, and the results can make our lives easier.

The company is offering the download to developers only (this ain’t no public preview) and is at pains to say it isn’t intended for daily or consumer use right now.

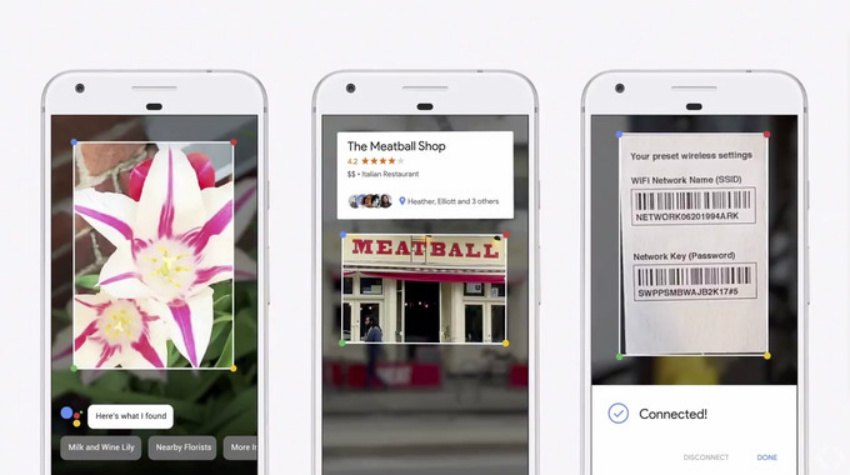

With Lens, you’ll be able to do things like copy text, scan QR codes and identify famous landmarks.

Still, Lens is not perfect, and it will need some time to mature. It managed to make the correct guess only after three attempts. With the Pixel 2, the search giant built stronger AI capabilities into the camera.

Google has also improved the visibility and functionality of notifications in Android P. The new MessagingStyle notification highlights who is messaging and how the user can reply. There is no word yet on a possible release date. Google had announced the feature at MWC.

Lens can also save you some typing: if you photograph something with an address on it, Lens will identify the address and pull up Google Maps directions, so you don't have to type it in. When it comes to landmarks or other places, Google Lens is limited to what Google can recognize.

Google Lens has been rolled out to all Android smartphones and tablets inside the Google Photos app. It is the company’s own visual search engine, which draws on the web to recognise what is in the images the user has taken.

Anything you capture with Google Lens can be revisited whenever you want. Lens takes it from there: it is intelligent enough to isolate the main object in the photo and then pull more information about it from the web.